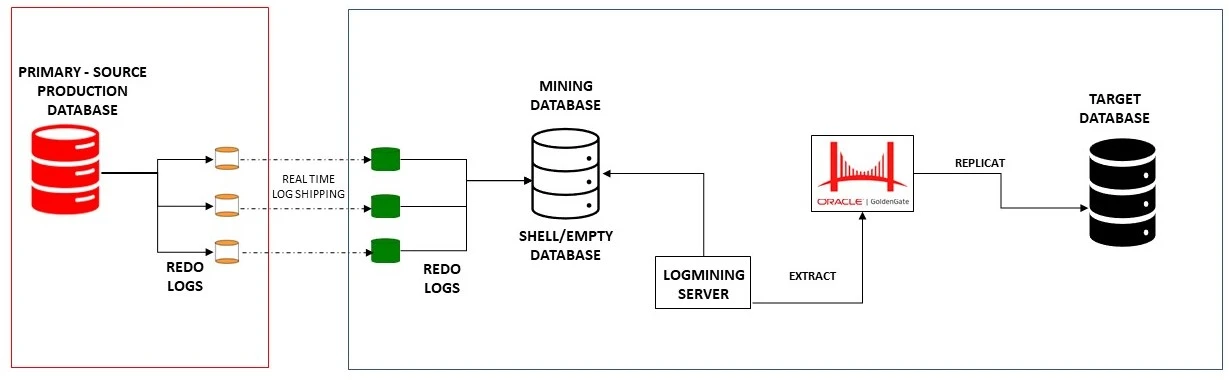

GoldenGate DownStream Capture : To offload Source Database load

In many cases, companies prefer not to use primary production database for the downstream layer to extract data for integration or reporting purposes. Instead, they want the database to be connected solely for their core critical applications. In some other scenarios access to primary production databases is not available, such as in the case of SaaS or application vendor’s databases and many more.

To mitigate the issues or address the challenges and enable real-time data extraction, a GoldenGate downstream capture architecture has been designed. With GoldenGate Downstream deployment, you can transfer the burden of extracting data from the source database to an intermediate or target server. This design involves extracting data from different databases, commonly referred to as Mining Database.

As part of a downstream deployment, the source database and mining database are separate databases, while the Logmining server is located in the downstream database. Logs are shipped from the source database to the downstream database over the network using redo transport (similar to Data Guard).GoldenGate extracts changes from redo logs (or archive files) using Logmining servers in the downstream database. Mining Database is just a shell/empty database. Like DataGuard the redo logs are not applied to the shell database, Mining database just receives the redo log streams from primary source database.

Real-time mining can only be set up for a single source database on a downstream database server at any given point in time. If the implementation involves replicating more than one source database, the archive log mode of the downstream capture must be configured. Archive log mode of downstream is not a real time capture.

This article provides detailed step-by-step instructions on how to transmit real-time redo log data to a downstream Mining Database.

PDB NAME : PDB1

MINING DB NAME : MINDB

TARGET DB NAME : TGTDB

All the 3 databases are running on 19c

SQL> SELECT supplemental_log_data_min, force_logging FROM v$database;

If the result is YES, the database meets the Oracle GoldenGate requirement.

If the result is NO,

SQL> ALTER DATABASE ADD SUPPLEMENTAL LOG DATA;

ENABLE_GOLDENGATE_REPLICATION PARAMETER

SQL> alter system set enable_goldengate_replication=true scope=both sid=’*’;

SQL> show parameter ENABLE_GOLDENGATE_REPLICATION

Value should be True.

CREATE USERS AND TABLESPACES IN CDB+PDB

SQL> create tablespace TBS_GGS datafile ‘+DATAC1’ size 500m;

Tablespace created.

SQL> create user c##ggadmin identified by “Password_123” default tablespace TBS_GGS temporary tablespace temp;

User created.

SQL> alter user c##ggadmin quota unlimited on TBS_GGS;

User altered.

SQL> alter user c##ggadmin identified by “Password_123”;

User altered.

SQL> exec dbms_goldengate_auth.grant_admin_privilege(‘c##ggadmin’,container=>’all’);

PL/SQL procedure successfully completed.

SQL> show pdbs

2 PDB$SEED READ ONLY NO

3 PDB1 READ WRITE NO

SQL> alter session set container=PDB1;

Session altered.

SQL> create tablespace TBS_GGS datafile ‘+DATAC1’ size 500m;

Tablespace created.

SQL> create user c##ggadmin identified by “Password_123” default tablespace TBS_GGS temporary tablespace temp;

User created.

SQL> alter user c##ggadmin quota unlimited on TBS_GGS;

User altered.

SQL> alter user c##ggadmin identified by “Password_123”;

User altered.

GRANT PRIVILEGES in CDB + PDB

grant connect,resource to c##ggadmin;

grant create session to c##ggadmin;

grant select any dictionary, select any table,SELECT ANY TRANSACTION to c##ggadmin;

grant create table, alter any table to c##ggadmin;

grant flashback any table to c##ggadmin;

grant execute on dbms_flashback to c##ggadmin;

grant execute on utl_file to c##ggadmin;

grant select on system.logmnr_session$ to c##ggadmin;

CHECK CONNECTIVITY

sqlplus /nolog connect c##ggadmin/Pasword_124@CONNECTION_STRING

SOURCE DATABASE CONFIG

SQL> show parameter LOG_ARCHIVE_CONFIG

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

log_archive_config string

SQL> Alter system set LOG_ARCHIVE_CONFIG='DG_CONFIG=(PRIMDB, MINDB)' scope=both sid='*';

System altered.

SQL> show parameter LOG_ARCHIVE_CONFIG

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

log_archive_config string DG_CONFIG=(PRIMDB, MINDB)

SQL> show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED READ ONLY NO

3 PDB1 READ WRITE NO

SQL> alter session set container=PDB1;

Session altered.

SQL> ALTER PLUGGABLE DATABASE ADD SUPPLEMENTAL LOG DATA;

Pluggable database altered.

If source DB is not using DB Doman, make sure to turn it off on Mining DB too.

Check the Mining Database config section for more information.

COPY THE PASSWORD FILE FROM SOURCE TO MINING DATABASE

12c: Data Guard Physical Standby – Managing password files in a RAC Physical Standby (Doc ID 1984091.1)

Test the connectivity between source & Mining Database using SYS account & TNSPING.

From Mining Database :

[oracle@admin]$ sqlplus sys@MINDB as sysdba

SQL*Plus: Release 19.0.0.0.0 – Production on Wed Nov 23 12:38:41 2022

Version 19.17.0.0.0

Copyright (c) 1982, 2022, Oracle. All rights reserved.

Enter password:

Last Successful login time: Wed Nov 23 2022 12:37:36 -08:00

Connected to:

Oracle Database 19c EE Extreme Perf Release 19.0.0.0.0 – Production

Version 19.17.0.0.0

SQL>

[oracle@admin]$ sqlplus sys@PRIMDB as sysdba

SQL*Plus: Release 19.0.0.0.0 – Production on Wed Nov 23 13:02:09 2022

Version 19.17.0.0.0

Copyright (c) 1982, 2022, Oracle. All rights reserved.

Enter password:

Last Successful login time: Wed Nov 23 2022 12:38:50 -08:00

Connected to:

Oracle Database 19c EE Extreme Perf Release 19.0.0.0.0 – Production

Version 19.17.0.0.0

SQL>

CONNECTION SUCCESSFUL

From Source Database :

[oracle@npxdb-urbxq1 admin]$ sqlplus sys@PRIMDB as sysdba

SQL*Plus: Release 19.0.0.0.0 – Production on Wed Nov 23 12:38:41 2022

Version 19.17.0.0.0

Copyright (c) 1982, 2022, Oracle. All rights reserved.

Enter password:

Last Successful login time: Wed Nov 23 2022 12:37:36 -08:00

Connected to:

Oracle Database 19c EE Extreme Perf Release 19.0.0.0.0 – Production

Version 19.17.0.0.0

SQL>

[oracle@npxdb-urbxq1 admin]$ sqlplus sys@MINDB as sysdba

SQL*Plus: Release 19.0.0.0.0 – Production on Wed Nov 23 13:02:09 2022

Version 19.17.0.0.0

Copyright (c) 1982, 2022, Oracle. All rights reserved.

Enter password:

Last Successful login time: Wed Nov 23 2022 12:38:50 -08:00

Connected to:

Oracle Database 19c EE Extreme Perf Release 19.0.0.0.0 – Production

Version 19.17.0.0.0

SQL>

CONNECTION SUCCESSFUL

SQL> Alter system set LOG_ARCHIVE_CONFIG='DG_CONFIG=(PRIMDB, MINDB)' scope=both sid='*';

System altered.

SQL> show parameter domain

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

db_domain string dbc.idnpd.oraclevcn.com

SQL> show parameter global_name

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

global_names boolean TRUE

SQL> select * from global_name;

GLOBAL_NAME

--------------------------------------------------------------------------------

MINDB.DBC.IDNPD.ORACLEVCN.COM dbc.idnpd.oraclevcn.com

TURN off this parameter.

SQL> alter system set db_domain='' scope =spfile sid='*';

System altered.

SQL> UPDATE GLOBAL_NAME SET GLOBAL_NAME ='MINDB';

1 row updated.

SQL> commit;

Commit complete.

SQL> alter system set global_names=FALSE scope=both sid='*';

System altered.

UPDATE THE SERVICE_NAMES PARAMETER

SQL> alter system set service_names='MINDB' scope=both sid='*';

System altered.

Modify the TNS Entries to the correct service names.

MINDB=

(DESCRIPTION=

(ADDRESS=

(PROTOCOL= TCP)

(HOST= host-prod-scan.dbc.idnpd.oraclevcn.com)

(PORT= 1521))

(CONNECT_DATA=

(SERVER= DEDICATED)

(SERVICE_NAME=QAMINED)))

Reboot the database.

srvctl stop database -d MINDB

srvctl start database -d MINDB

LOG SHIPPING PARAMETERS ON SOURCE DATABASE

SQL> show parameter log_archive_dest_state_3

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

log_archive_dest_state_3 string enable

SQL> alter system set log_archive_dest_state_3=DEFER;

System altered.

SQL> Alter system set LOG_ARCHIVE_DEST_3='SERVICE=MINDB ASYNC NOREGISTER VALID_FOR=(ONLINE_LOGFILES,PRIMARY_ROLE) REOPEN=10 DB_UNIQUE_NAME=MINDB' scope=both sid='*';

System altered.

SQL> show parameter LOG_ARCHIVE_DEST_3

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

log_archive_dest_3 string SERVICE=MINDB ASYNC NOREGIST

ER VALID_FOR=(ONLINE_LOGFILES,

PRIMARY_ROLE) REOPEN=10 DB_UNI

QUE_NAME=MINDB

Check the REDO LOG SIZE

SQL>

set pagesize 5000

set lines 200

column REDOLOG_FILE_NAME format a60

SELECT

a.GROUP#,

a.THREAD#,

a.SEQUENCE#,

a.ARCHIVED,

a.STATUS,

b.MEMBER AS REDOLOG_FILE_NAME,

(a.BYTES/1024/1024) AS SIZE_MB

FROM v$log a

JOIN v$logfile b ON a.Group#=b.Group#

ORDER BY a.GROUP#;

GROUP# THREAD# SEQUENCE# ARC STATUS REDOLOG_FILE_NAME SIZE_MB

---------- ---------- ---------- --- ---------------- -------------------------------------------------- ----------

12 1 106 NO CURRENT +DATAC1/PRIMDB/ONLINELOG/group_12.4132.1121368637 10240

13 1 102 YES INACTIVE +DATAC1/PRIMDB/ONLINELOG/group_13.4133.1121368637 10240

14 1 103 YES INACTIVE +DATAC1/PRIMDB/ONLINELOG/group_14.4134.1121368637 10240

15 1 104 YES INACTIVE +DATAC1/PRIMDB/ONLINELOG/group_15.4135.1121368637 10240

16 1 105 YES INACTIVE +DATAC1/PRIMDB/ONLINELOG/group_16.4136.1121368637 10240

22 2 101 YES INACTIVE +DATAC1/PRIMDB/ONLINELOG/group_22.2846.1121368265 10240

23 2 102 NO CURRENT +DATAC1/PRIMDB/ONLINELOG/group_23.4128.1121368269 10240

24 2 99 YES INACTIVE +DATAC1/PRIMDB/ONLINELOG/group_24.4129.1121368275 10240

25 2 100 YES INACTIVE +DATAC1/PRIMDB/ONLINELOG/group_25.4130.1121368279 10240

26 2 0 YES UNUSED +DATAC1/PRIMDB/ONLINELOG/group_26.4176.1121528989 10240

10 rows selected.

LOG SHIPPING PARAMETERS ON MINING DATABASE

Resize REDO log group in Mining DB exactly like Source DB

THREAD 1 :

SQL> alter database add logfile thread 1 group 101 '+DATAC1' size 10240M;

Database altered.

SQL> alter database add logfile thread 1 group 102 '+DATAC1' size 10240M;

Database altered.

SQL> alter database add logfile thread 1 group 103 '+DATAC1' size 10240M;

Database altered.

SQL> alter database add logfile thread 1 group 104 '+DATAC1' size 10240M;

Database altered.

SQL> alter database add logfile thread 1 group 105 '+DATAC1' size 10240M;

Database altered.

THREAD 2 :

SQL> alter database add logfile thread 2 group 201 '+DATAC1' size 10240M;

Database altered.

SQL> alter database add logfile thread 2 group 202 '+DATAC1' size 10240M;

Database altered.

SQL> alter database add logfile thread 2 group 203 '+DATAC1' size 10240M;

Database altered.

SQL> alter database add logfile thread 2 group 204 '+DATAC1' size 10240M;

Database altered.

Drop the old Redolog group & Thread

SQL> alter database drop logfile group 2;

Database altered.

SQL> alter database drop logfile group 3;

Database altered.

SQL> alter database drop logfile group 4;

Database altered.

SQL> alter database drop logfile group 5;

Database altered.

SQL> alter database drop logfile group 7;

Database altered.

SQL> alter database drop logfile group 8;

Database altered.

Now the REDO Logs on MINING DB is exactly the same as Source DB.

GROUP# THREAD# SEQUENCE# ARC STATUS REDOLOG_FILE_NAME SIZE_MB

---------- ---------- ---------- --- ---------------- ------------------------------------------------------------ ----------

101 1 10 YES INACTIVE +DATAC1/MINDB/ONLINELOG/group_101.4196.1121528385 10240

102 1 11 NO CURRENT +DATAC1/MINDB/ONLINELOG/group_102.4197.1121528395 10240

103 1 0 YES UNUSED +DATAC1/MINDB/ONLINELOG/group_103.4198.1121528401 10240

104 1 0 YES UNUSED +DATAC1/MINDB/ONLINELOG/group_104.4199.1121528411 10240

105 1 0 YES UNUSED +DATAC1/MINDB/ONLINELOG/group_105.4200.1121528421 10240

201 2 7 NO CURRENT +DATAC1/MINDB/ONLINELOG/group_201.4201.1121528583 10240

202 2 0 YES UNUSED +DATAC1/MINDB/ONLINELOG/group_202.4202.1121528591 10240

203 2 0 YES UNUSED +DATAC1/MINDB/ONLINELOG/group_203.4203.1121528599 10240

204 2 0 YES UNUSED +DATAC1/MINDB/ONLINELOG/group_204.4204.1121528609 10240

205 2 0 YES UNUSED +DATAC1/MINDB/ONLINELOG/group_205.4175.1121529059 10240

10 rows selected.

ADD STANDBY REDO LOGS to Mining Database :

Note -> Create Standby redo log files (same size as online redo log files and number of groups should be one greater than existing online redo log groups)

alter database add standby logfile thread 1 group 301 '+DATAC1' size 10240M reuse;

alter database add standby logfile thread 1 group 302 '+DATAC1' size 10240M reuse;

alter database add standby logfile thread 1 group 303 '+DATAC1' size 10240M reuse;

alter database add standby logfile thread 1 group 304 '+DATAC1' size 10240M reuse;

alter database add standby logfile thread 1 group 305 '+DATAC1' size 10240M reuse;

alter database add standby logfile thread 1 group 306 '+DATAC1' size 10240M reuse;

alter database add standby logfile thread 2 group 401 '+DATAC1' size 10240M reuse;

alter database add standby logfile thread 2 group 402 '+DATAC1' size 10240M reuse;

alter database add standby logfile thread 2 group 403 '+DATAC1' size 10240M reuse;

alter database add standby logfile thread 2 group 404 '+DATAC1' size 10240M reuse;

alter database add standby logfile thread 2 group 405 '+DATAC1' size 10240M reuse;

alter database add standby logfile thread 2 group 406 '+DATAC1' size 10240M reuse;

check Standby-Redo log size

set pagesize 5000

set lines 200

column REDOLOG_FILE_NAME format a60

SELECT

a.GROUP#,

a.THREAD#,

a.SEQUENCE#,

a.ARCHIVED,

a.STATUS,

b.MEMBER AS REDOLOG_FILE_NAME,

(a.BYTES/1024/1024) AS SIZE_MB

FROM v$standby_log a

JOIN v$logfile b ON a.Group#=b.Group#

ORDER BY a.GROUP#;

GROUP# THREAD# SEQUENCE# ARC STATUS REDOLOG_FILE_NAME SIZE_MB

---------- ---------- ---------- --- ---------- ------------------------------------------------------------ ----------

301 1 0 YES UNASSIGNED +DATAC1/MINDB/ONLINELOG/group_301.4174.1121617673 10240

302 1 0 YES UNASSIGNED +DATAC1/MINDB/ONLINELOG/group_302.4163.1121617711 10240

303 1 0 YES UNASSIGNED +DATAC1/MINDB/ONLINELOG/group_303.4161.1121617715 10240

304 1 0 YES UNASSIGNED +DATAC1/MINDB/ONLINELOG/group_304.4166.1121617721 10240

305 1 0 YES UNASSIGNED +DATAC1/MINDB/ONLINELOG/group_305.4165.1121617727 10240

306 1 0 YES UNASSIGNED +DATAC1/MINDB/ONLINELOG/group_306.4164.1121617733 10240

401 2 0 YES UNASSIGNED +DATAC1/MINDB/ONLINELOG/group_401.4205.1121617817 10240

402 2 0 YES UNASSIGNED +DATAC1/MINDB/ONLINELOG/group_402.4206.1121617823 10240

403 2 0 YES UNASSIGNED +DATAC1/MINDB/ONLINELOG/group_403.4207.1121617829 10240

404 2 0 YES UNASSIGNED +DATAC1/MINDB/ONLINELOG/group_404.4208.1121617835 10240

405 2 0 YES UNASSIGNED +DATAC1/MINDB/ONLINELOG/group_405.4209.1121617839 10240

406 2 0 YES UNASSIGNED +DATAC1/MINDB/ONLINELOG/group_406.4210.1121617845 10240

12 rows selected.

SQL> alter system set LOG_ARCHIVE_DEST_2='LOCATION=+RECOC1 VALID_FOR=(STANDBY_LOGFILES, ALL_ROLES)' scope=both;

System altered.

ENABLE LOG_ARCHIVE_DEST_STATE_3 ON SOURCE DATABASE

SQL> ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_3=ENABLE;

System altered.

SQL> show parameter log_archive_dest_state_3

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

log_archive_dest_state_3 string ENABLE

SWITCH THE LOGFILE SOURCE DATABASE : Try with multiple switches.

SQL> ALTER SYSTEM SWITCH ALL LOGFILE;

System altered.

ALTER SYSTEM SWITCH ALL LOGFILE start (PRIMDB)

2022-11-24T16:55:35.307525-08:00

Thread 1 advanced to log sequence 158 (LGWR switch), current SCN: 10368398650198

2022-11-24T16:55:35.307547-08:00

ALTER SYSTEM SWITCH ALL LOGFILE complete (PRIMDB)

Current log# 14 seq# 158 mem# 0: +DATAC1/PRIMDB/ONLINELOG/group_14.4134.1121368637

2022-11-24T16:55:35.345364-08:00

ARC0 (PID:298230): Archived Log entry 308 added for T-1.S-157 ID 0x4c46f004 LAD:1

2022-11-24T16:55:37.459684-08:00

rfs (PID:277677): krsr_rfs_atc: Identified database type as 'PRIMARY': Client is ASYNC (PID:267063)

2022-11-24T16:55:37.780622-08:00

rfs (PID:277677): Opened log for T-1.S-158 dbid 1279761413 branch 1121368635

2022-11-24T16:55:38.779370-08:00

rfs (PID:277799): krsr_rfs_atc: Identified database type as 'PRIMARY': Client is ASYNC (PID:133494)

2022-11-24T16:55:38.916390-08:00

rfs (PID:277799): Opened log for T-2.S-153 dbid 1279761413 branch 1121368635

SQL> select max(sequence#) from v$log_history where THREAD#=1;

MAX(SEQUENCE#)

--------------

158

SQL> select max(sequence#) from v$log_history where THREAD#=2;

MAX(SEQUENCE#)

--------------

153

ASMCMD> pwd

+RECOC1/MINDB/ARCHIVELOG/2022_11_24

ASMCMD> ls -l

Type Redund Striped Time Sys Name

ARCHIVELOG HIGH COARSE NOV 24 16:00:00 Y thread_1_seq_158.1199.1121619337

ARCHIVELOG HIGH COARSE NOV 24 16:00:00 Y thread_2_seq_153.1619.1121619339

- At this point REDO Streaming will be from Source to Mining Database.

- Extracts will read the real time REDO Logs through LOGMINER.

NOTE : You can find the complete oracle documentation here

Hope you found this article useful.

Disclaimer: The views expressed on this document are my own and do not necessarily reflect the views of Oracle..